The marvelously lightweight testing tool you never knew existed

Spend less time documenting and more time actually testing

So easy to use, the learning curve is flat

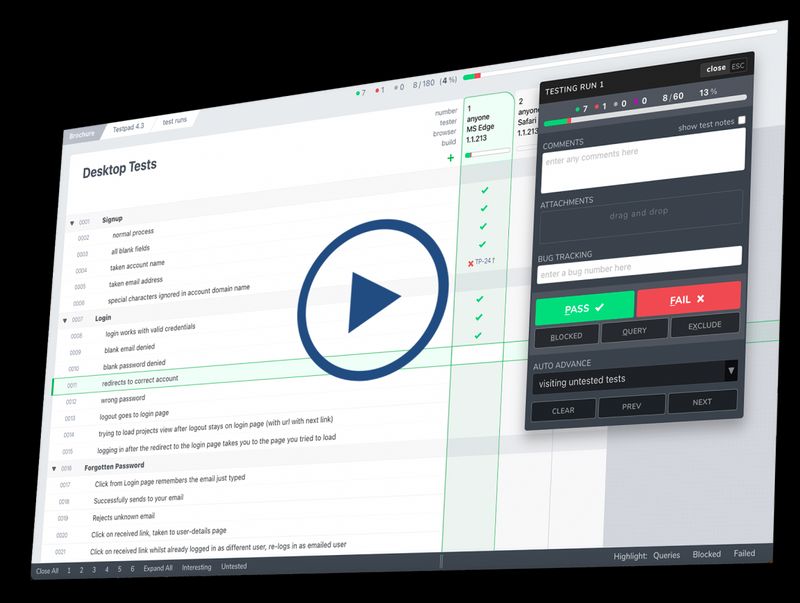

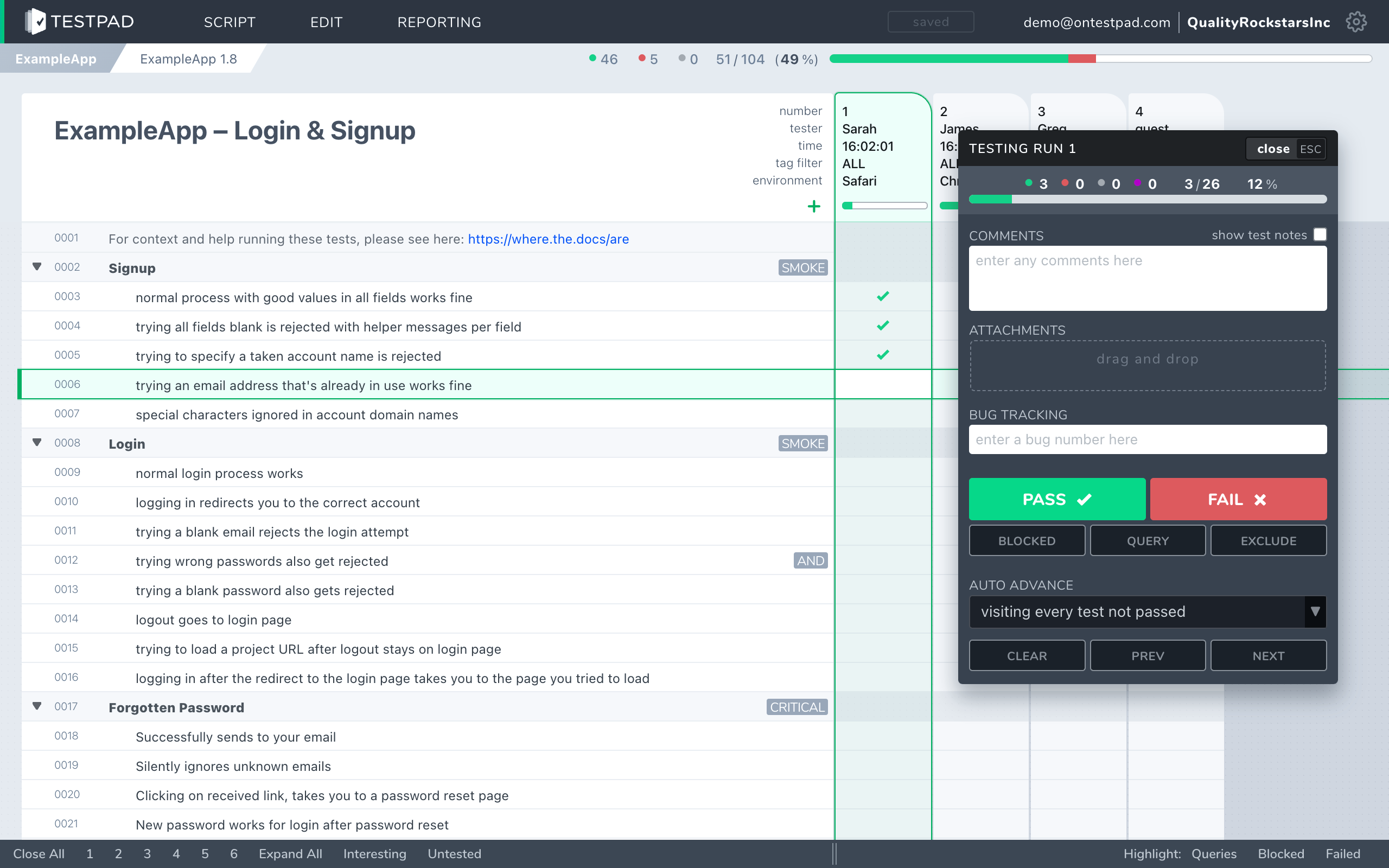

Anyone can test with Testpad. Our intuitive interface means that when it’s crunch time, anyone can pitch in and help test — from QA teams to managers, developers, outsourced testers, or strangers in your coworking office.

Guest testers don’t even need a login.

“We use Testpad to track all our testing. It offers the depth and flexibility to model our entire test plan, but remains simple enough that onboarding new testers is effortless. The import and export facilities are really helpful for migrating test plans from other test management tools.”

Eric Wolf

Senior Solutions Architect, Bell | Testpad customer for 6 years

Better than spreadsheets & simpler than bloated TCM

Ditch complicated, buggy test case management tools. Stay organized without losing sight of what's next. Testpad's hierarchical checklists go deeper than your neighbour on mushrooms watching Inception.

When plans inevitably change, just drag and drop items where they need to go. Add, delete, and edit using the keyboard-driven interface. You’ll find your team is planning and testing more — literally without lifting a finger.

“In the past we used other test repositories but they were cumbersome and harder to use than a simple spreadsheet. For us, Testpad combines the visibility and quickness of a spreadsheet with a nice UI.”

Stewart Warner

Managing Director, Foxhole QA | Testpad customer for 4 years

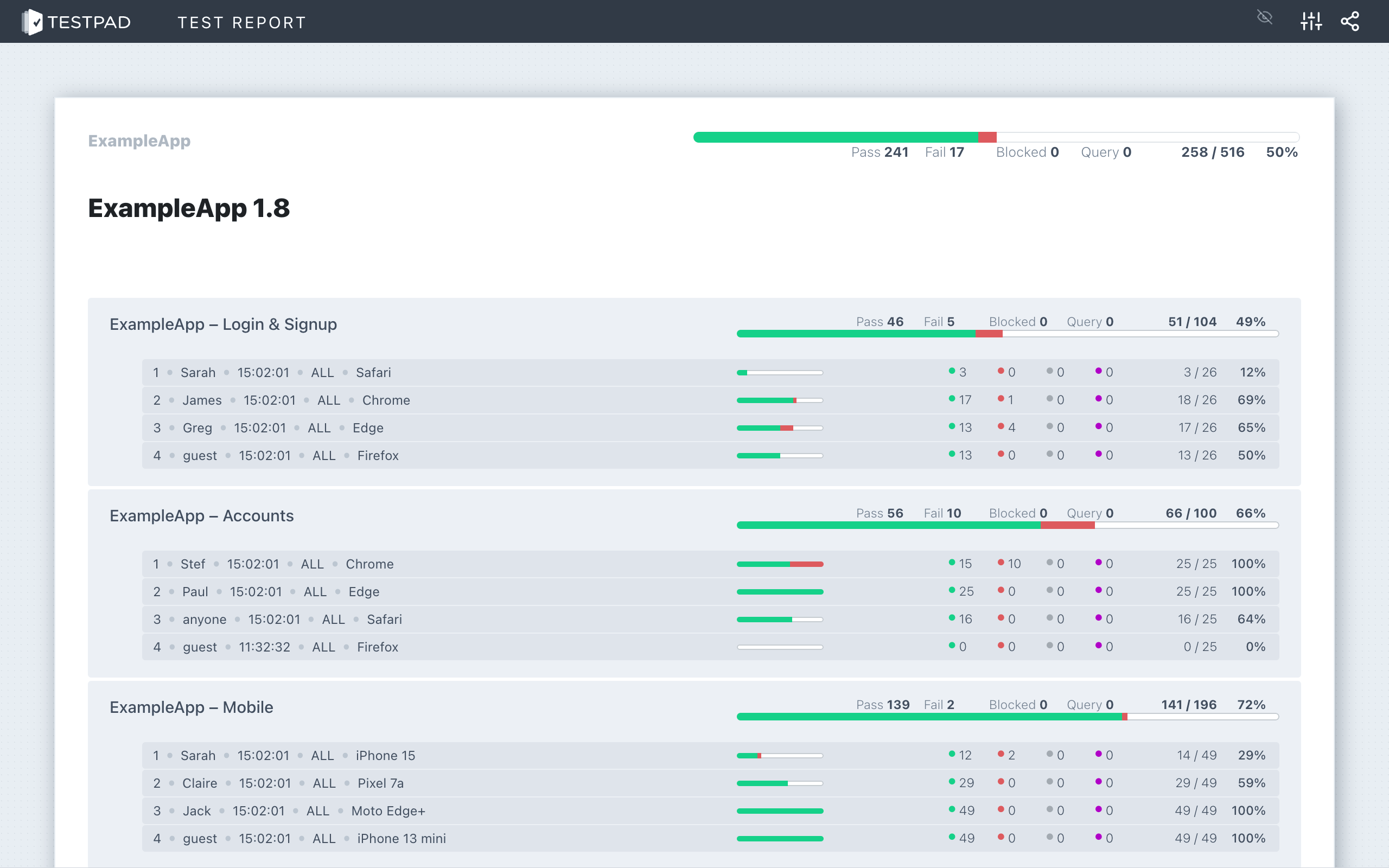

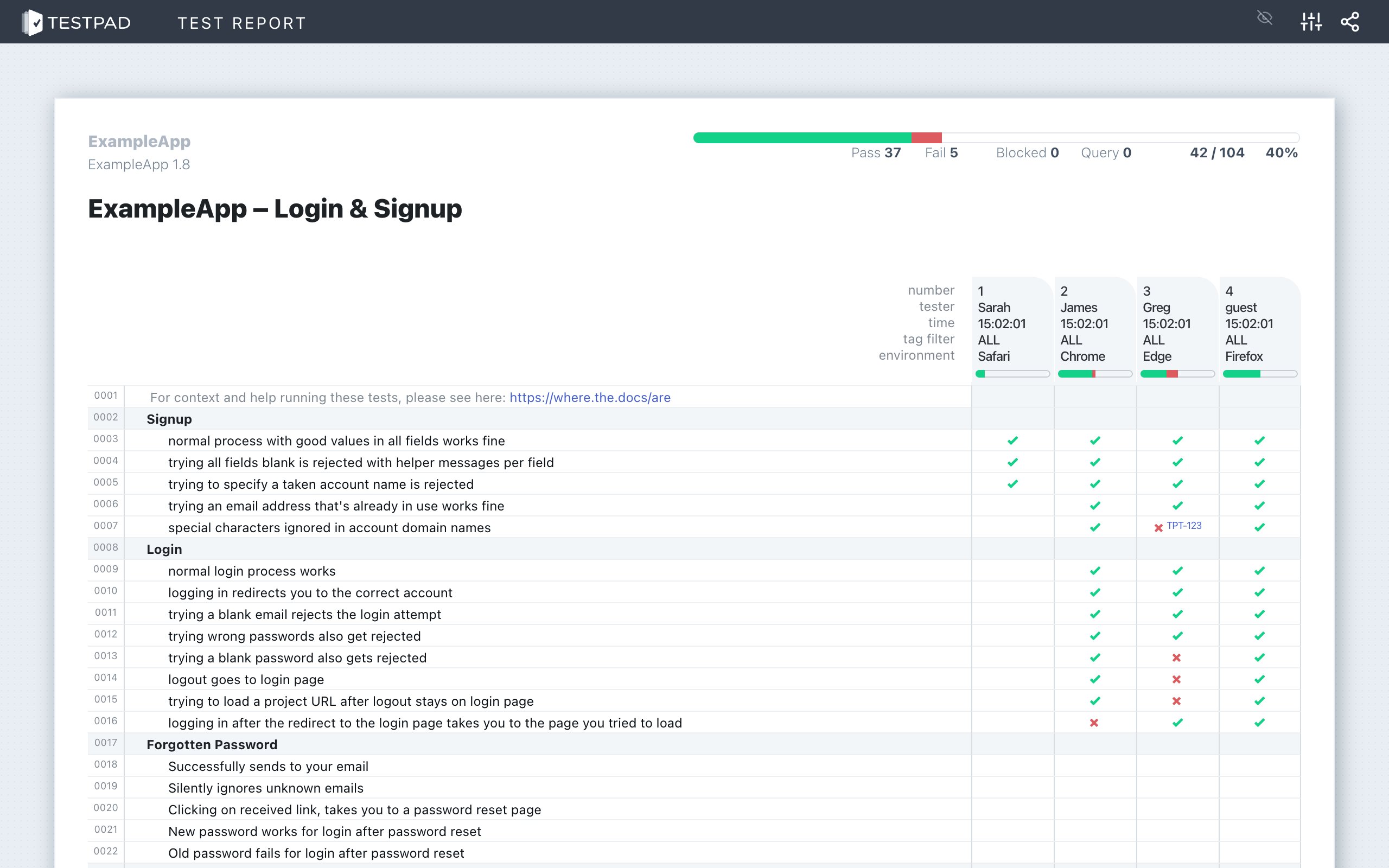

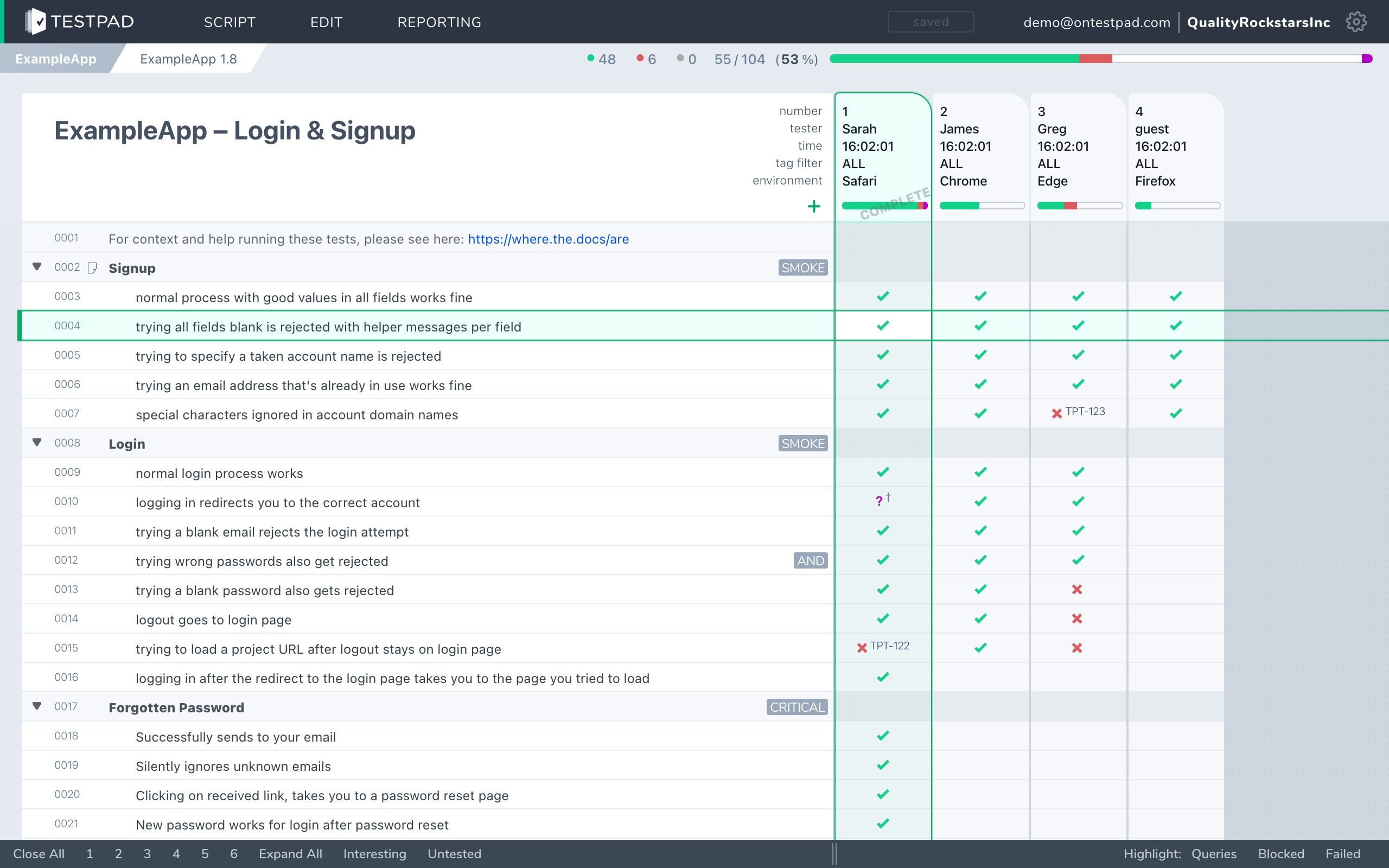

Are we ready yet? Reporting that actually gives you the answer

Get an instant visual overview of what's been looked at so far (and what hasn't). See where the problems are and get to fixing them faster.

Share links to live progress reports, or go old-school and leave a printout on your boss's desk. Power move.

USED DAILY BY HUNDREDS OF

Developers

Startups

QA Professionals


However you like to run your QA, discover a more pragmatic approach to testing

USE TESTPAD FOR...

Exploratory testing

When you don’t know what you don’t know

Exploratory testing is the best kind of testing. It’s making it up as you go along… with brain engaged. It makes space for humans to find unpredicted bugs, experiment with edge cases, or point out opportunities to improve. You should be doing it.

Regression testing

When breaking the same thing twice is embarrassing

Pro tip: you don’t need to wait until you can write fancy automated tests to start regression testing. With Testpad’s flexibility, you can build manual regression test plans as you go to make sure you don’t repeat mistakes from previous releases.

User acceptance testing

When you need to show off your work

Use Testpad to prove you met the brief. It's a transparent way for everyone to agree you've done a great job. No messing around with transcribing spreadsheets, or arranging for test tool training courses.

What you can and can't do with Testpad

What you can do with Testpad

Start testing in 5 minutes

Organize scripts with folders and projects

Tag and filter tests to run only the subsets you need

Bring in guest testers for extra help

Add clickable links to bugs in Jira and other issue trackers

Attach images and files

Share progress reports instantly

Test using mobiles, with seamless handover

Edit tests during testing, because that's when you have all the ideas

Build templates for seamless re-use

And probably a lot more that our users have figured out and haven’t told us about

What you can't do with Testpad

Get bogged down in tutorials

Fret over whether you’re 'testing right'

Lock yourself into rigid test structures so you never test at all

Store test knowledge and progress inside one person’s head (lest they get pancaked by a city bus)

Bask in a false sense of security thanks to unhelpful process

Procrastinate adding more tests because it's too much effort

Wait 5 minutes every time you want to generate a new report

Enjoy the irony of finding bugs in the test management tool

14 years, 1000s of customers and over 100 million test results

From Grandma’s garage to GitHub, teams of all shapes & sizes love Testpad

Check out a few of our testimonials

Plan, test, and review more efficiently than ever before. You’ll wish you’d started using Testpad sooner.

NO SOFTWARE DOWNLOADS

NO CREDIT CARDS

NO SHENANIGANS